2DCV Python Implementation

III. Robust Principal Component Analysis

Robust Principal Component Analysis (RPCA) is used to separate a given data matrix into two distinct matrices: one representing the smooth component, and the other capturing the sparse component.

Definition

Suppose a noisy matrix, denoted as D∈Rh×w, can be divided into two components: a clean part represented by a low-rank matrix A, and a noisy part captured by a sparse matrix E. The problem of RPCA is to recover the low-rank matrix A and the sparse matrix E from the noisy matrix D. The optimization problem can be formulated as follows.

A,Emin∥A∥∗+λ∥E∥1s.t.D=A+E

Exact ALM Method

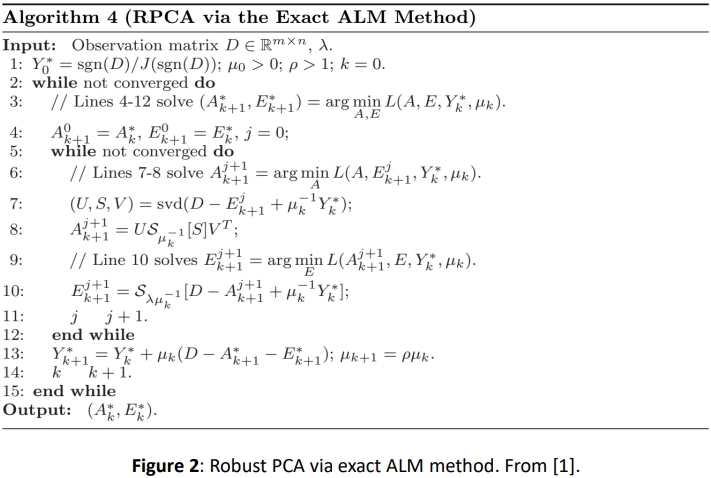

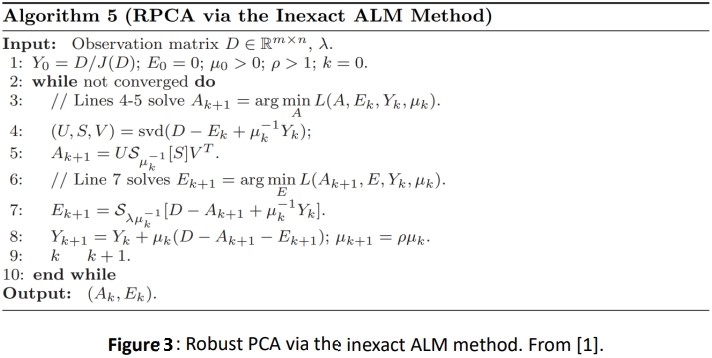

To address the optimization problem, we can employ the Augmented Lagrange Multipliers (ALM) method as outlined in [1]. For a comprehensive explanation of the algorithm, please refer to the original article. Figure 2 illustrates the robust PCA via the exact ALM method.

The corresponding Python implementation is shown below.

# Robust PCA via the Exact ALM Method

def RPCA(D, l, mu, rho):

signD = np.sign(D)

Y = signD / np.maximum(np.linalg.norm(signD, ord=2, axis=(0,1)), 1/l*np.linalg.norm(signD, ord=np.inf, axis=(0,1)))

A = np.zeros(D.shape)

E = np.zeros(D.shape)

A_prev = np.zeros(D.shape)

E_prev = np.zeros(D.shape)

for k in range(500):

# Solve A and E

for j in range(500):

# Solve A

U, S, Vh = np.linalg.svd(D - E + Y/mu, full_matrices=False)

A = U @ np.diag(np.where(S > 1/mu, S - 1/mu, np.where(S < -1/mu, S + 1/mu, 0))) @ Vh

# Solve E

Q = D - A + Y/mu

E = np.where(Q > l/mu, Q - l/mu, np.where(Q < -l/mu, Q + l/mu, 0))

# Convergence condition

if np.linalg.norm(A - A_prev, ord='fro') / np.linalg.norm(D, ord='fro') < 1e-5

and np.linalg.norm(E - E_prev, ord='fro') / np.linalg.norm(D, ord='fro') < 1e-5:

break

A_prev = A

E_prev = E

# Update Y and mu

Y = Y + mu*(D - A - E)

mu = rho*mu

# Convergence condition

if np.linalg.norm(D - A - E, ord='fro') / np.linalg.norm(D, ord='fro') < 1e-7:

break

return A, E

Inexact ALM Method

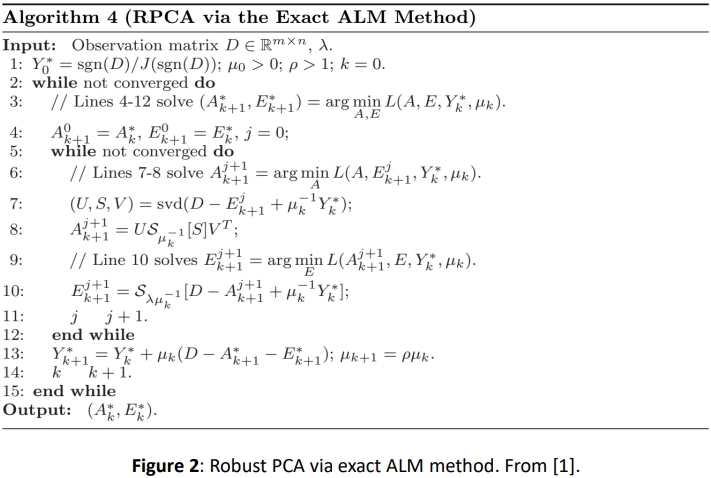

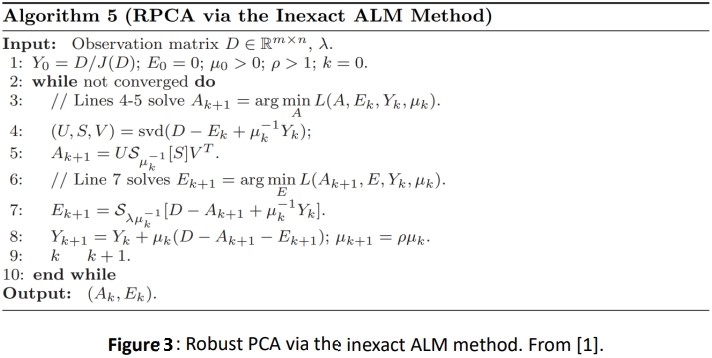

Alternatively, we can employ the inexact version of the ALM method by removing the inner loop present in the exact version. According to [1], it has been observed that A and E can still converge to an optimal solution without precisely solving the subproblem within the inner loop. Just a single step is sufficient for convergence. The detailed proof can be found in the original article. Figure 3 illustrates the algorithm.

The corresponding Python implementation is shown below.

# Robust PCA via the Inexact ALM Method

def RPCA_inexact(D, l, mu, rho):

Y = D / np.maximum(np.linalg.norm(D, ord=2, axis=(0,1)), 1/l*np.linalg.norm(D, ord=np.inf, axis=(0,1)))

A = np.zeros(D.shape)

E = np.zeros(D.shape)

A_prev = np.zeros(D.shape)

E_prev = np.zeros(D.shape)

for k in range(500):

# Solve A

U, S, Vh = np.linalg.svd(D - E + Y/mu, full_matrices=False)

A = U @ np.diag(np.where(S > 1/mu, S - 1/mu, np.where(S < -1/mu, S + 1/mu, 0))) @ Vh

# Solve E

Q = D - A + Y/mu

E = np.where(Q > l/mu, Q - l/mu, np.where(Q < -l/mu, Q + l/mu, 0))

# Update Y and mu

Y = Y + mu*(D - A - E)

mu = rho*mu

# Convergence condition

if np.linalg.norm(D - A - E, ord='fro') / np.linalg.norm(D, ord='fro') < 1e-7:

break

return A, E

Reference

[1] Zhouchen Lin, Minming Chen, and Yi Ma, The Augmented Lagrange Multiplier

Method for Exact Recovery of Corrupted Low-Rank Matrices, arXiv:1009.5055, 2010.

Note

The above content is a brief introduction to the RPCA algorithm. For more details on its application, please refer to my report.